Mobile Operator Benchmarking Reports: What do they mean?

On February 6, 2020, the Mobile Video Industry Council and Enea Openwave launched the industry’s first bite-sized thought leadership series for mobile operators. These 15-minute “microcasts” will cover a diverse range of topics that will affect mobile operators in 2020 and far beyond.

The subscriber is at the heart of the mobile ecosystem. Measurement companies like Tutela, RootMetrics, P3, Ookla and Opensignal use scientific criteria to measure each operator’s performance and determine which network best serves the average user. These operator rankings can directly affect an operator’s business, but, importantly, the test criteria differ from one measurement company to the next, and there are no standards. So what do we make of these rankings? Let’s look at some of the specific questions asked on the Microcast:

Why do performance measurement companies rank mobile operators?

Operator benchmarking helps consumers identify “the best carrier” and, over time, highlights the different characteristics that each carrier brings to the marketplace, so that consumers can make better-informed choices. These rankings can be a key instrument for an operator’s marketing department. Being ranked “Number 1 Operator” by an independent test house is a headline that can sway the customer standing in a phone store wondering which network to choose. In short, whatever you think of measurement companies, their rankings affect the bottom line.

Measuring and understanding network performance is obviously critical. But rather than just looking at overall rankings, measurement companies also examine the relative performance of operators: not just who is first or second, but how large the gap between them is and whether the difference is significant. They also ask which use cases are affected by those differences.
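Whether a gap between two operators is meaningful depends on how much the results would vary if the tests were repeated. One common way to check this is a bootstrap confidence interval on the difference in median download speed. The sketch below is illustrative only: the function name `median_gap_ci`, the sample speeds and the choice of medians are our own assumptions, not any measurement company’s published method.

```python
import random
import statistics

def median_gap_ci(a, b, n_boot=2000, alpha=0.05, seed=0):
    """Bootstrap a (1 - alpha) confidence interval for median(a) - median(b).

    a, b: per-test download speeds in Mbps for two operators (hypothetical input).
    Returns (observed_gap, lower_bound, upper_bound).
    """
    rng = random.Random(seed)  # fixed seed so results are reproducible
    observed = statistics.median(a) - statistics.median(b)
    gaps = []
    for _ in range(n_boot):
        # Resample each operator's tests with replacement and recompute the gap.
        resampled_a = rng.choices(a, k=len(a))
        resampled_b = rng.choices(b, k=len(b))
        gaps.append(statistics.median(resampled_a) - statistics.median(resampled_b))
    gaps.sort()
    # Percentile method: take the alpha/2 and 1 - alpha/2 quantiles of the bootstrap gaps.
    lower = gaps[int(alpha / 2 * n_boot)]
    upper = gaps[int((1 - alpha / 2) * n_boot) - 1]
    return observed, lower, upper

# Hypothetical per-test speeds (Mbps) for two operators.
operator_a = [50, 52, 48, 55, 51, 49, 53, 50]
operator_b = [30, 32, 29, 31, 33, 28, 30, 31]
print(median_gap_ci(operator_a, operator_b))
```

If the interval excludes zero, the gap is unlikely to be sampling noise alone; if it straddles zero, “first” and “second” may be statistically indistinguishable.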

Exactly how are the measurements conducted?

There are key differences in the way these tests are carried out by different measurement companies.

For example, RootMetrics was keen to stress that its measurements are always conducted using off-the-shelf handsets, left unmodified from their boxed state. The tests are conducted at a range of randomly chosen locations to avoid bias, and exactly the same tests are run on different carriers at the same time and place for a completely fair comparison. Altogether, the testing aims to provide a scientific basis and a statistically accurate view of real-world performance from a consumer’s point of view.

However, other measurement companies (such as Tutela) use anonymized, crowdsourced information and collect data from millions of real-world users, rather than from a small number of dedicated testers. This data comes from a variety of handsets, from flagship to mid-range devices. The tests are run against the most frequently used Content Delivery Networks (CDNs), using a fixed file size that emulates the most common consumer use cases. By looking at the performance of actual users, on their actual phone tariffs, against the CDNs that underpin the most popular services, measurement companies aim to answer questions such as: how quickly does a popular webpage load on a specific handset, tariff and carrier network?
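To make the fixed-file-size approach concrete, the sketch below converts each crowdsourced download of a fixed-size test file into a throughput figure and takes the median per operator and device tier. The 2 MB file size, the `CrowdTest` record fields and the grouping are hypothetical choices for illustration; real crowdsourcing platforms will differ.

```python
import statistics
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical fixed test-file size; real measurement companies choose their own.
FILE_SIZE_BYTES = 2_000_000

@dataclass
class CrowdTest:
    operator: str
    device_tier: str   # e.g. "flagship" or "mid-range"
    duration_s: float  # time taken to download the fixed-size file

def throughput_mbps(duration_s: float, size_bytes: int = FILE_SIZE_BYTES) -> float:
    """Convert a fixed-size download time into megabits per second."""
    return size_bytes * 8 / duration_s / 1e6

def median_by_group(tests):
    """Median throughput per (operator, device tier).

    Grouping by device tier keeps differences in handset mix between
    operators from being mistaken for network differences.
    """
    groups = defaultdict(list)
    for t in tests:
        groups[(t.operator, t.device_tier)].append(throughput_mbps(t.duration_s))
    return {key: statistics.median(speeds) for key, speeds in groups.items()}

# Hypothetical crowdsourced results.
tests = [
    CrowdTest("Operator A", "flagship", 0.8),
    CrowdTest("Operator A", "flagship", 1.0),
    CrowdTest("Operator A", "mid-range", 1.6),
    CrowdTest("Operator B", "flagship", 2.0),
]
print(median_by_group(tests))
```

Using the median rather than the mean is one way to stop a handful of very fast or very slow samples from dominating a crowdsourced result.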

Both approaches have their merits – but the point is that they are very different.

Do ranking companies measure video performance on operator networks? How?

Video is now a core component of a subscriber’s quality of experience (QoE), and measurement companies do address it. They adopt a user-experience-driven approach to gauge the QoE of video streaming and downloading. For example, video tests based on crowdsourced data can evaluate different devices and judge how they perform on mobile networks versus Wi-Fi when videos are downloaded or streamed from services such as Netflix, YouTube and Facebook and the CDNs that deliver them.

The data points collected while running these video tests can reveal critical aspects of the end-user experience, such as:

- How long did the video take to buffer?

- Did playback stall at any point?
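These two questions map onto common video QoE measures: startup delay (how long the video took to start) and stalls (how often, and for how long, playback froze to rebuffer). The sketch below derives both from a timeline of player events; the event names and record format are hypothetical, since each test harness logs playback differently.

```python
from dataclasses import dataclass

@dataclass
class VideoQoE:
    startup_delay_s: float  # time from pressing play to the first frame
    stall_count: int        # number of rebuffering events after playback began
    stall_time_s: float     # total time spent rebuffering

def video_qoe(events):
    """Summarize a time-ordered list of (timestamp_s, event_name) tuples.

    Event names ("play_requested", "first_frame", "stall_start", "stall_end")
    are hypothetical placeholders for whatever a real test harness logs.
    """
    requested = first_frame = stall_started = None
    stall_count = 0
    stall_time = 0.0
    for t, name in events:
        if name == "play_requested" and requested is None:
            requested = t
        elif name == "first_frame" and first_frame is None:
            first_frame = t
        elif name == "stall_start" and first_frame is not None and stall_started is None:
            # Only stalls after the first frame count; earlier waiting is startup delay.
            stall_started = t
            stall_count += 1
        elif name == "stall_end" and stall_started is not None:
            stall_time += t - stall_started
            stall_started = None
    if requested is None or first_frame is None:
        raise ValueError("playback never started")
    # Simplification: a stall still open at the end of the log is counted but adds no stall time.
    return VideoQoE(first_frame - requested, stall_count, stall_time)

# Hypothetical playback session.
session = [
    (0.0, "play_requested"),
    (1.2, "first_frame"),
    (10.0, "stall_start"),
    (12.5, "stall_end"),
    (30.0, "stall_start"),
    (31.0, "stall_end"),
]
print(video_qoe(session))
```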

For operators, there are two further aspects of video QoE that measurement companies evaluate and that are worth bearing in mind:

- Quality of delivery versus quality of picture (that is, buffering versus resolution) is sometimes overlooked. Operators have found that subscribers prefer consistently delivered standard-definition (SD) video to high-definition (HD) video that buffers excessively. By running tests against popular streaming services globally on a 24/7 basis, measurement companies can tell operators what quality subscribers find acceptable. (Previous testing within operators also indicates that most users simply cannot tell whether a video they are watching on a phone is low-, medium- or high-definition.)

- The impact of calls and texts is another critical criterion. Ranking companies also evaluate how video playback holds up when the device receives calls and texts mid-stream.

So, given the demand that video streaming places on mobile networks, operators must optimize their networks and CDNs to provide an optimal, consistent experience, which may well be at a lower definition. The most obvious example is the move in many countries to offer unlimited video plans, such as T-Mobile USA’s Binge On, first introduced in late 2015, which encourages subscribers to watch standard-definition streaming over cellular networks for an all-in price.

What about usability metrics for video, such as video watch times?

Unsurprisingly, all measurement companies focus on “network metrics”: feeds and speeds. But when it comes to video, what about “usability metrics” such as the number of videos played and video watch times? These also give an indication of an operator’s performance, albeit a more subjective one.

Both of our guests responded in a similar way: they agreed that video watch times can reveal a wealth of data, but there are privacy and commercial issues that ranking companies have to consider carefully, so they tend not to focus on these usability metrics. (Note, however, that measuring “watch time” is not the same as monitoring which videos are actually watched.)

A further reason given for not measuring watch times is that applications such as TikTok can soar in popularity for a few hours and then drop off the radar. Watch-time measurements could therefore be heavily skewed by such intermittent effects.

Ranking companies do, however, conduct tests over Wi-Fi to compare long-term video playback on mobile networks with that on fixed broadband. This can provide an accurate assessment of congestion and QoE across different delivery mechanisms, as well as across different applications such as Netflix, YouTube and Hulu.

The popularity of smartphone and tablet screens for watching video means that, for operators and subscribers alike, video performance is arguably the most important consideration. There are also many different streaming applications, each of which behaves differently. On top of that, new services such as Apple TV+ and Disney+ are rolling out, with a host of others due to come online during 2020 and beyond. Content providers, operators and consumers all need to understand how these services will perform and how they can be delivered consistently.

Why is there no global standard for measuring and ranking operators?

Both guests commented that their industry has struggled with the lack of standards and common methodologies. Some measurement companies are working actively with regulators globally to use crowdsourced data to enhance and extend their existing capabilities in mobile broadband measurement and assurance. But the conclusion here is that the business of measuring operators is nascent and, as yet, has no agreed standards.

This explains why the same operator can be ranked differently by different measurement companies: put simply, it comes down to differences in methodologies and criteria. For example, large urban zones in, say, China, Japan, the UK or the US differ: the same number of potential users might be concentrated in areas of vastly different size and topography. This will be reflected in different results as the criteria and tests change across ranking companies. However, both companies interviewed said they would expect an operator ranked highly by any one of them to be ranked highly by ALL of them.

As a footnote, while the industry and consumers alike often equate the fastest download speeds with “the best”, this approach is overly simplistic. No single metric can capture the usability of a service as complex as mobile connectivity. Measurement companies recognize that they need other metrics and methodologies, focused more on the user experience as the true measure of performance, that can match the ubiquity and appeal of download speeds. Of course, agreeing on this is easier said than done.

Are there new emerging metrics for 5G compared to 4G LTE?

The arrival of 4G was the key enabler of streaming video over mobile networks. 5G will build on that by adding new video use cases such as Augmented Reality (AR) and Virtual Reality (VR), plus, of course, a new range of low-latency applications in industrial settings. As a result, 5G performance measurements will be use-case driven rather than based on standardized network metrics. This is a new and as yet undeveloped area.

Measurements would include aspects such as the consistency of VR experiences: for example, how often did the network fail to deliver a packet within a given latency tolerance? Network measurements will of course remain important in 5G, especially as they will also need to cover performance within specific network slices.
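The latency-tolerance question can be expressed as a simple ratio: the share of packets that were either lost or arrived later than the application’s latency budget. In the sketch below, the function name, the 20 ms budget and the sample latencies are purely hypothetical; a real VR or industrial use case would define its own tolerance, and might measure it separately for each network slice.

```python
from typing import Optional, Sequence

def latency_failure_rate(latencies_ms: Sequence[Optional[float]], budget_ms: float) -> float:
    """Fraction of packets that were lost (None) or exceeded budget_ms."""
    if not latencies_ms:
        raise ValueError("no packets measured")
    failures = sum(1 for lat in latencies_ms if lat is None or lat > budget_ms)
    return failures / len(latencies_ms)

# Hypothetical one-way latencies (ms); None marks a packet that never arrived.
samples = [5.0, 8.0, 25.0, None, 12.0]
print(latency_failure_rate(samples, budget_ms=20.0))  # 2 of 5 packets fail -> 0.4
```

Reporting this rate per time window, rather than once per session, would also show how consistent the experience was over time.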

In summary

Operator measurement companies are fulfilling an incredibly important role. Their reports are highly regarded and can in fact be more useful than operators’ own measurement reports. Being ranked #1 provides an operator with huge marketing clout, but equally not being #1 provides valuable feedback to carriers on where exactly their problems lie.

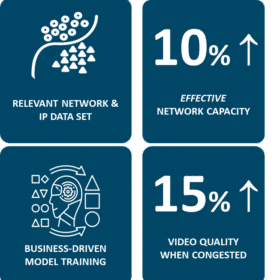

End-to-end, real-world network testing has always paid dividends. It is not just about measuring the radio part of the network; it is about delivering a consistent experience for users by measuring end-to-end performance. Operators can then look to solve problems and deliver service improvements without having to upgrade thousands of cell sites.

And that is why, in the ultra-competitive wireless landscape, ranking companies such as Tutela and RootMetrics play a pivotal role. It is not just about being first; it is about gaining a real commercial competitive advantage that places you at the top of the game among subscribers and stakeholders.
